Returning my focus to the hands-on! Day 5

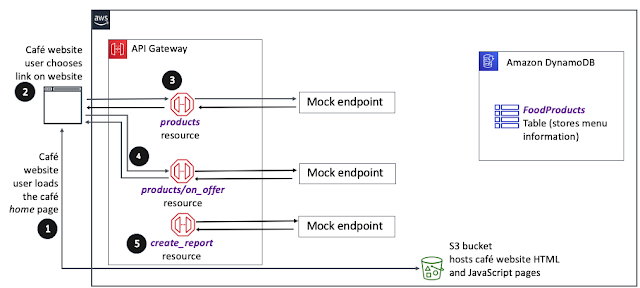

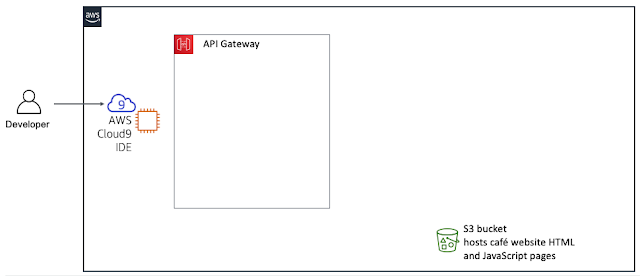

I'm really trying to work through the labs in the AWS re:Start graduate environment so that I can move on to focusing on programming and some more complex material on Udemy. Not to mention, lots of the labs aren't working because of silly things like IAM permissions going awry. For example, I'm not given permission to view objects in the S3 bucket. Real shame.

This lab involved creating a REST API with mock endpoints. I feel like it should have come before another lab I've already done, which covered this plus connecting the API endpoints to a DB, but nevertheless it has helped me solidify my understanding of APIs once again. It also gave me more exposure to the AWS SDK for Python, aka Boto3, which I'm starting to see is the core underpinning of how automated and serverless architectures are built in AWS.

I am starting to feel really excited because the dots of everything I've learnt are genuinely starting to connect and compound into some serious knowledge. It feels a little bit like learning to drive basicall