Returning my focus to the hands-on! Day 2

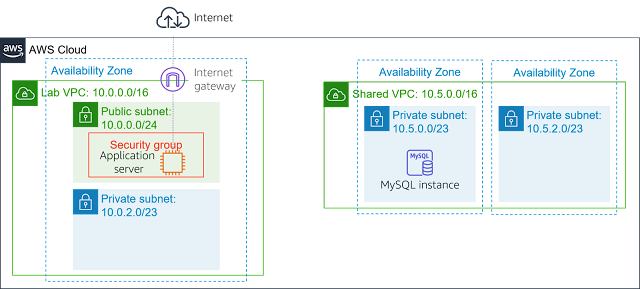

So just a quick little lab before I give my significant other a lift - creating a VPC peering connection. Unlike yesterday, there's no need to faff about creating the infrastructure as it's already prepared in the lab. Not much to say here except that, to my recollection, it was at least my first time setting this up.

Unfortunately, as with many pre-set labs in this AWS learning environment, the bootstrapping often seems to fail. Here I was supposed to connect via the application server to the MySQL instance in the private subnet (whose VPC has no IGW). Never mind, onto the next one!

So actually, before doing this next lab I had started another, but as mentioned before, the AWS learning environment doesn't always deploy properly or requires workarounds. In this instance, the script was supposed to grant S3 bucket access to my IP (which I enter in the Cloud9 terminal), but it didn't work for whatever reason. Unable to proceed, I moved on to the next lab.

So in this lab, we're simply implementing the ElastiCache service in our Elastic Beanstalk-hosted app with the Aurora backend, yay for serverless architecture! As this was my very first exposure to ElastiCache, I was very happy to be familiarising myself with another AWS service.

The first thing was setting up all the necessary networking to allow the service to function. This meant first creating a subnet group for the ElastiCache cluster to sit within, allowing of course for high availability. The next bit was more fun, as it was basically setting up the Python scripts to read from the database cluster endpoint and cache the results via the ElastiCache endpoint.
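I did the subnet group in the console, but for reference, here's a rough sketch of what the same step might look like in Python. It assumes a boto3 ElastiCache client is passed in; the group name and subnet IDs are made up for illustration and aren't from the lab.

```python
def create_cache_subnet_group(client, name, subnet_ids,
                              description="Subnets for the ElastiCache cluster"):
    """Create an ElastiCache subnet group covering the given subnets.

    `client` is assumed to be boto3.client("elasticache"). It is passed in
    rather than created here so the function can be tried without AWS access.
    """
    # A single subnet would pin the cluster to one Availability Zone,
    # so insist on at least two subnets (ideally in different AZs).
    if len(subnet_ids) < 2:
        raise ValueError("provide at least two subnets for high availability")

    return client.create_cache_subnet_group(
        CacheSubnetGroupName=name,
        CacheSubnetGroupDescription=description,
        SubnetIds=list(subnet_ids),
    )


# Hypothetical usage (names and IDs are placeholders):
#   import boto3
#   client = boto3.client("elasticache")
#   create_cache_subnet_group(client, "lab-cache-subnets",
#                             ["subnet-aaaa1111", "subnet-bbbb2222"])
```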

By virtue of having done a MySQL course, the query bit is pretty self-explanatory and, well, this much Python is too! Line 7 has the ElastiCache endpoint and line 11 defines the database endpoint we're pulling from. The lab then has us add, modify and delete records, testing the caching feature each time. Worth noting, as you can see below, that the Python code clears the cache whenever any action is taken on the database.
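This isn't the lab's script, just my own minimal sketch of the same read-through-then-invalidate idea so it can be run anywhere. As stand-ins (my assumptions, not what the lab uses), an in-memory SQLite database plays the part of Aurora and a plain dict plays the part of ElastiCache; the `CachedStore` class and `records` table are hypothetical names.

```python
import sqlite3


class CachedStore:
    """Reads go through the cache; any write clears the cache."""

    CACHE_KEY = "records"

    def __init__(self, db, cache):
        self.db = db          # stand-in for the Aurora connection
        self.cache = cache    # stand-in for the ElastiCache client
        self.db_reads = 0     # counts how often we actually hit the database

    def get_all(self):
        # Cache hit: return without touching the database.
        if self.CACHE_KEY in self.cache:
            return self.cache[self.CACHE_KEY]
        # Cache miss: query the database and remember the result.
        self.db_reads += 1
        rows = self.db.execute(
            "SELECT id, name FROM records ORDER BY id"
        ).fetchall()
        self.cache[self.CACHE_KEY] = rows
        return rows

    def add(self, name):
        self.db.execute("INSERT INTO records (name) VALUES (?)", (name,))
        self.db.commit()
        self.cache.clear()    # cached rows are now stale

    def delete(self, record_id):
        self.db.execute("DELETE FROM records WHERE id = ?", (record_id,))
        self.db.commit()
        self.cache.clear()    # cached rows are now stale


def make_store():
    """Build a store backed by a fresh in-memory database and empty cache."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, name TEXT)")
    return CachedStore(db, {})


if __name__ == "__main__":
    store = make_store()
    store.add("first")
    store.get_all()           # miss, reads the database
    store.get_all()           # hit, served from the cache
    store.add("second")       # write clears the cache
    store.get_all()           # miss again
    print("database reads:", store.db_reads)
```

Clearing the whole cache on every write is the blunt option, but it keeps the reads correct without having to work out which cached entries a change affects.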

Then, out of the blue in the lab scenario, a fictional colleague has updated the application in our infrastructure, so now it's my job to update the Docker image after entering the code for the relevant ElastiCache endpoints. Anyway, it gives me a little bit of insight into some JavaScript, which is only good. So I'm working DevOps now huh? :)

The final bit of the lab is pushing the image to ECR, which you can see below.

Wait - no that's not true, there was one further bit I had to action in the AWS console.

As the updated application written in Java introduced an environment variable for the ElastiCache service, I had to go and input this into my Elastic Beanstalk environment.
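I set this through the console, but as a rough sketch, the same change could probably be scripted along these lines. It assumes a boto3 Elastic Beanstalk client is passed in; the environment name, variable name and endpoint value in the usage comment are placeholders of mine, not the lab's actual values.

```python
# Namespace Elastic Beanstalk uses for application environment variables.
ENV_NAMESPACE = "aws:elasticbeanstalk:application:environment"


def env_var_setting(key, value):
    """Build the option setting that defines one environment variable."""
    return {"Namespace": ENV_NAMESPACE, "OptionName": key, "Value": value}


def set_env_var(client, environment_name, key, value):
    """Set one environment variable on an Elastic Beanstalk environment.

    `client` is assumed to be boto3.client("elasticbeanstalk"). Updating
    the environment triggers a configuration deployment on the AWS side.
    """
    return client.update_environment(
        EnvironmentName=environment_name,
        OptionSettings=[env_var_setting(key, value)],
    )


# Hypothetical usage (all values are placeholders):
#   import boto3
#   client = boto3.client("elasticbeanstalk")
#   set_env_var(client, "my-lab-env", "CACHE_ENDPOINT",
#               "my-cache.example.cache.amazonaws.com:11211")
```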

So, compared to yesterday, I found this lab far more rewarding. Really nice to see how you use code and the native Cloud services to build serverless infrastructure - this felt like a task you could be asked to do in a live production environment, loved it. Unfortunately my next set of labs involves more network topologies, but despite the faff of doing it via the console, it helps solidify the most robust architecture principles in your head, so it's all part of the journey.