CLI Fundamentals for the Cloud

Operating a computer with BASH is the reality the end-user faced prior to Window's graphical user interface. In the end however, what we're actually doing is rather much the same. By deploying actions via a CLI, we're empowered in our agency in what we can schedule and automate in the environment.

Ensuring we're starting off at the right directory is key, for that we use;

pwd

This outputs are current directory which informs us were we're currently sitting at in the system.

A basic rule of command syntax is that is delivered in below format;

command -> option -> argument

eg.

sudo usermod -a -G Sales arosalez

sudo usermod -> -a -G -> Sales arosalez

The most basic commands revolve around providing quick user or system data.

I won't elaborate on the most of these as they are either ubiquitous or pretty self evident.

ls, cd, whoami, id, hostname, uptime, date

cal, clear, echo, history, touch, cat

Don't forget to use the tab autocomplete function when inputting CLI commands, it should save you lots of time!

When you create users in Linux, by default they are included on the /etc/passwd file.

You can use the head or tail command to display either the first or last 10 lines of a file. One of the options of a head or tail command is -n, which lets us determine how many lines we display. So in the example we wanted to print only the first 3 lines of the passwd file, we'd enter the following;

head -n 3 /etc/passwd

Moving forward quickly, useradd is another vital command with some handy options such as determining the users home directory path -d, adding an account expiration date -e and even a comment -c feature usually used to house a users full name.

If we want to check we've successfully added a user onto our Linux machine, we can use the grep command. This command searches within documents again with the command/option/argument syntax we saw above. If I want to want to check if SgtSunshine is a user, I'd input;

grep SgtSunshine /etc/password

Other related commands to users are passwd and userdel. But users are only the atoms of our building blocks as we'll be predominately utilising groups to manage user permissions making groupadd, groupmod and groupdel completely essential. Another powerful related command is gpasswd used to administer the /etc/group file allowing for users to be added -a or deleted -d with members set with -M option and administrators by -A.

The building blocks of users and groups boils down to user permission levels. Fundamental to this is the root user, which has complete access over a computer systems, highlighting the need to create a standard user with the right privileges to avoid any unauthorized access across the system.

By also using the /etc/sudoers file, we can however issue permissions for specific users or groups to use particular commands via the visudo command. As a user with these permissions, we utilize the sudo (delegated permissions) command as it's safer then using su (full administrative permissions) where we would need to know the administrator password! If we want to actually list our sudo permissions we'd enter;

sudo -lu

This is extremely handy when troubleshooting if a command isn't working for you. Moreover, the use of the sudo command is also automatically logged at /var/log/messages, meaning it's usage can be adequtely monitored.

When we want to edit files in the command line, there are two popular text editors that work directly within the shell, vim and nano (the latter may need installation into debian/ubuntu distributions - sudo apt-get install nano). Now because of the excellent Vimtutor mode, I won't spend much time reviewing the commands here or the interaction between command and insert mode. However below I've briefly listed how we enter each editor;

vi <filename>

nano <filename>

Nano is much more straight forward with CTRL+<key> being used for the majority of options. Whilst Vim operates much more inline with a CLI with commands like forced quit without saving :q! and forced save and quit :wq! commands.

A further core element of the CLI is utilising it to get around the file system. Below I've listed some of the core directories commonly used in Linux distributions;

/ root of file system

/boot boot files and kernal

/dev devices

/etc configuration files

/home standard user's home dir

/media removeable media

/mnt network drives

/root root user home dir

/var log files, print spool, network services

As mentioned at the very beginning of this post, the ls command is one of the most commonly used CLI commands as it essentially lists the files & folders listed in our current directory. This command has a number of options -l for the long format, which allows us importantly to view permissions. -a show us the hidden files, -R shows us the subdirectories within and it's also possible to sort by extension, file size, modification time and ver number, but I haven't listed these here.

To view files, it's usual to just deploy the cat command. But in the case of exploring log files, for example, which are likely to contain more lines of text then could fit in our entire command line, it makes more sense to use the head, tail, more or less commands to determine the way in which we will most optimally view view the file. Like many, if not all Linux commands the aforementioned, come each with their own bespoke set of options to most accurately execute your desire.

Very interestingly for me as a Windows user for all my computing life, Linux treats everything as a file! And I mean commands, devices, directories and documents - EVERYTHING. Plus, unlike Windows, it treats everything case sensitive, so this is always worth paying close attention to.

Like the Windows shortcut for copy pasting (CTRL+C, CTRL+P) to copy a file or directory you simply need to use cp <file-name> <destination> to do so. If we want to then remove the copied file, we'd use rm command instead. However, very usefully, to avoid removing a directory with files within, you're required to utilise the additional option feature of the command line to achieve this.

mkdir makes a directory and can make multiples without an option. But if you use the -p option, you can make multiple nested directories at once which is pretty useful;

mkdir -p /home/user/dir1/dir2

Now to move a file/dir we follow a similar pattern to cp. We use mv <option> <destination>. Again there are a flurry of different options to ensure we don't encounter a number of issues, having to rename the file with an additional command and avoiding overwriting existing files as an example. rmdir is a specific command, which is practically an equivalent to rm -d.

The command I've now got a twitch reaction to inputting the moment I SSH into an EC2 instance is pwd. This just confirms where you are in the file directory structure, really important as it will determine the outcomes of your commands.

A concept which isn't unique to the Linux file system however is the use of absolute and relative paths. Simply the distinction here is that the absolute path is authoritative, it leads straight from the root directory. This idea is familiar to me as when building this simple website, I would use relative hyperlinking from within the directory the webpage sat within.

Building on working with the file system within Linux, we have an additional bundle of commands to work with;

- hash: lists the history of programs and commands executed from within the CLI.

- cksum: verifies a file has not changed, can be used to check downloaded files too.

- find: searches for files with additional options of specific criteria like file name: size and owner. Can also be additionally deployed with wildcards to enhance it's operation too. It has options to automatically delete the returned list -delete or even execute a determined command straight away -exec <command>.

- grep: looks inside the content of a file to assess if there is a text pattern that matches your desired input. It also is powerful enough to search files within a directory grep <string being searched> <directory path>, making it really useful.

- diff: used whenever you wish to find out the difference between two different files quickly.

- tar: turns multiple files into a single file. To later extract a .tar file, you use -x option. To get this .tar extension however, you may also wish to use the -f option, to specify it!

- gzip: can only compress one single file, so is usually used with the tar command.

- zip: can compress multiple files and even directory hierarchies.

- unzip: decompresses the contents of a file.

The principle of least privilege.The principle that a security architecture should be designed so that each entity is granted the minimum system resources and authorizations that the entity needs to perform its function.

read - allows a user to access the file.write - allows a user to access & edit the file.

execute - allows a user to run a script. If you use Windows, you can think of this as a .exe. It also allows users to view a file, so can be considered a higher level of access then just 'read'.

The command to view the file list displaying permissions in this way is ls -l.

The above slide also shows how to make the symbolic command determine between the individual user or by the group - the latter is also vital as it allows us to manage user access levels most effectively. However, there is an easier option within the chmod command to employ associating permissions with files. Using numbers as the options, we can easily determine what permissions we wish to employ.

|

0 |

- -

- |

no access |

|

1 |

- - x |

execute

only |

|

2 |

- w - |

write

access only |

|

3 |

- w x |

write and

execute |

|

4 |

r - - |

read only |

|

5 |

r - x |

read and

execute |

|

6 |

r w - |

read and

write |

|

7 |

r w x |

read,

write and execute (full access) |

usermod -c Dev user maureliususermod -c "Dev user" maurelius

* eg. find *.txt documents/

^ Will return any file with a .txt extension.

? eg. find tex??.txt documents/

^ Will return any file matching, thus here would return potentially text1.txt, text2.txt but not text.txt as it's looking for two values in this example.

uptime > info.txt^ So above, we see that I first output the computer's uptime information, and then appended the host name information with the following inputs.

hostname >> info.txt

With this tool, we can simply pipe in a further command;

ps -ef | grep sshd^ the above command displays all processes, however is then piped to filter the process we're wanting to check on, in the example above, sshd. You can then also use top to check mem/cpu usage, again similar to the Windows Task Manager.

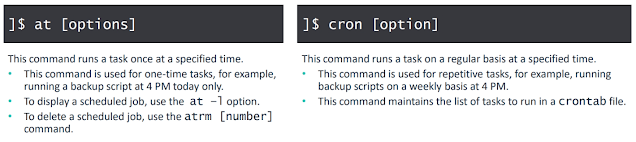

The most popular method to do this is with the at and cron job commands.

cron is more powerful, as it's a scheduler of tasks (the kind of thing we would use with logs, which I will mention soon!).

The little cheat sheet above shows us the clever format used by the command to determine when we'd want to run particular tasks. To further illustrate the point, I've included two examples below to further illuminate the concepts;

0 1 * * 1-5 /scripts/script.sh

Worth checking your system time is accurate eh! 😁 Another example;

*/10 * * * * /scripts/script.sh^ the above syntax now executes every 10 minutes! Using the slash operator function.

• Scripts also help us by providing documentation (if best practice is in place) elaborating on the purpose and actions being undertaken,

• Running a script requires proper permissions. That's why we talked about it alot above!

#!/bin/bash

echo "What is your name?"

read name

echo £Hello $name"

In essence, each of these represent different kind of package managers (bar the last of course[!]). They manage how we install, update, remove and inventory the software installed on our computer.