Storage in the AWS Cloud + AWS Transfer Family

Storage is one of the most critical components of cloud computing.

The main AWS Cloud storage services are grouped into four categories:

- Block storage – Amazon Elastic Block Store (Amazon EBS) provides highly available, low-latency block storage for workloads that need persistent storage accessible from an Amazon Elastic Compute Cloud (Amazon EC2) instance.

- Object storage – Two services fall in this category.

  - Amazon Simple Storage Service (Amazon S3) is designed to store objects of any type in a secure, durable, and scalable way, and to make them accessible over the internet.

  - Amazon S3 Glacier provides low-cost, highly durable object storage for long-term backup and archiving of any type of data.

- File storage – Two services support storing data at the file level.

  - Amazon Elastic File System (Amazon EFS) provides a simple, scalable, elastic file system for Linux-based workloads.

  - Amazon FSx for Windows File Server provides a fully managed, native Microsoft Windows file system to support Windows applications that run on AWS.

- Hybrid cloud storage – AWS Storage Gateway provides a link that connects your on-premises environment to AWS Cloud storage services in a resilient and efficient manner.

Amazon Elastic Block Store (Amazon EBS) offers block-level storage for Amazon EC2 instances; its volumes behave like virtual disks in the cloud. The three classic volume types are General Purpose (SSD), Provisioned IOPS (SSD), and Magnetic, each with different performance and cost characteristics; Magnetic is now a previous-generation type, and the current HDD-backed options are Throughput Optimized HDD and Cold HDD. EBS volumes persist independently of the instance's lifetime and support replication within the same Availability Zone, transparent encryption, Elastic Volumes, and backups through snapshots. Choose the volume type that matches your application's performance needs and cost constraints.

For example, changing one character in a 1-GB file is more efficient with block storage, because only the block that contains the character must be rewritten. With object storage, the entire 1-GB object must be uploaded again. This is the key difference between the two: block storage is faster and uses less bandwidth, but it costs more than object-level storage.
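To make the 1-GB example concrete, here is a toy model (not an AWS API) that counts the bytes each storage type must write for a small change. It assumes a 4-KiB block size, which is an illustrative value and not an EBS parameter.

```python
BLOCK_SIZE = 4 * 1024   # assumed block size (illustrative only)
FILE_SIZE = 1024 ** 3   # the 1-GB file from the example


def bytes_written_block(offset: int, length: int, block_size: int = BLOCK_SIZE) -> int:
    """Block storage rewrites only the blocks touched by the changed byte range."""
    first_block = offset // block_size
    last_block = (offset + length - 1) // block_size
    return (last_block - first_block + 1) * block_size


def bytes_written_object(file_size: int) -> int:
    """Object storage has no partial update: the whole object is uploaded again."""
    return file_size


changed_offset = 500_000_000  # hypothetical position of the edited character
print(bytes_written_block(changed_offset, 1))
print(bytes_written_object(FILE_SIZE))
```

A one-character edit costs one 4-KiB block write with block storage versus a full 1-GiB upload with object storage; an edit that straddles a block boundary touches two blocks.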

Amazon EBS provides persistent block-storage volumes for EC2 instances, so data is retained after the instance is stopped. EBS volumes are automatically replicated within their Availability Zone for high availability and durability. They offer consistent, low-latency performance and flexible scaling, and you pay only for what you provision.

Amazon EBS lets you create individual storage volumes and attach them to EC2 instances. The volumes provide durable, block-level storage and are automatically replicated within the same Availability Zone. They behave like external hard drives attached directly to the instance, with low-latency access, and suit many uses, including boot volumes, data storage, databases, and enterprise applications. You can create point-in-time snapshots of a volume for durability and disaster recovery. Encryption adds no additional cost; it is performed on the Amazon EC2 side, so data moving between EC2 instances and EBS volumes inside AWS data centers is encrypted in transit. With Elastic Volumes, you can increase capacity and change the volume type (for example, from HDD to SSD) without stopping the instance. Snapshots can also be shared, or copied to other AWS Regions for broader disaster recovery protection.
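The volume modification and cross-Region snapshot copy described above map to the boto3 EC2 calls `modify_volume` and `copy_snapshot`. The sketch below passes the client in as a parameter so it runs without AWS access. The target type `gp3`, the Regions, and the volume and snapshot IDs are hypothetical choices for illustration.

```python
from typing import Any


def grow_and_convert(ec2: Any, volume_id: str, new_size_gib: int, new_type: str = "gp3") -> dict:
    """Elastic Volumes: enlarge a volume and change its type while it stays attached."""
    return ec2.modify_volume(VolumeId=volume_id, Size=new_size_gib, VolumeType=new_type)


def copy_snapshot_to_region(ec2_dest: Any, source_region: str, snapshot_id: str) -> str:
    """Copy a snapshot into the Region that the ec2_dest client is configured for."""
    response = ec2_dest.copy_snapshot(
        SourceRegion=source_region,
        SourceSnapshotId=snapshot_id,
        Description=f"DR copy of {snapshot_id} from {source_region}",
    )
    return response["SnapshotId"]


# Usage with real clients (requires boto3 and AWS credentials; IDs are hypothetical):
#   import boto3
#   ec2_src = boto3.client("ec2", region_name="us-east-1")
#   grow_and_convert(ec2_src, "vol-0123456789abcdef0", new_size_gib=200)
#   ec2_dr = boto3.client("ec2", region_name="us-west-2")
#   copy_snapshot_to_region(ec2_dr, "us-east-1", "snap-0123456789abcdef0")
```

The copy is issued from a client in the destination Region. That is why the function takes `ec2_dest` rather than the source-Region client.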

Amazon EBS snapshots are backed up to Amazon S3 for durable recovery and are billed per GB-month of data stored. Inbound data transfer is free, and outbound data transfer is charged in tiers. Volume storage is billed on the amount provisioned, in GB per month. For General Purpose (SSD) volumes, IOPS are included in the price. Magnetic volumes are charged by the number of I/O requests, and Provisioned IOPS (SSD) volumes by the amount of IOPS provisioned.
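The pricing dimensions above combine additively. This worked sketch uses hypothetical placeholder prices (real prices vary by Region and change over time) and leaves out Magnetic per-request charges and data transfer.

```python
from dataclasses import dataclass


@dataclass
class EbsPrices:
    """Hypothetical per-Region prices, for illustration only."""
    gb_month: float            # provisioned volume storage, per GB-month
    snapshot_gb_month: float   # snapshot data stored in Amazon S3, per GB-month
    piops_month: float = 0.0   # per provisioned IOPS-month (Provisioned IOPS SSD only)


def monthly_cost(p: EbsPrices, provisioned_gb: int, snapshot_gb: float,
                 provisioned_iops: int = 0) -> float:
    volume = provisioned_gb * p.gb_month            # billed on provisioned size, not used size
    snapshots = snapshot_gb * p.snapshot_gb_month   # billed on data held in snapshots
    iops = provisioned_iops * p.piops_month         # zero for General Purpose (SSD)
    return round(volume + snapshots + iops, 2)


prices = EbsPrices(gb_month=0.10, snapshot_gb_month=0.05, piops_month=0.065)
print(monthly_cost(prices, provisioned_gb=500, snapshot_gb=120))
print(monthly_cost(prices, provisioned_gb=500, snapshot_gb=120, provisioned_iops=4000))
```

With these placeholder rates, a 500-GB General Purpose volume with 120 GB of snapshot data comes to 50 + 6 = 56 per month. Adding 4,000 provisioned IOPS on a Provisioned IOPS volume adds 260.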

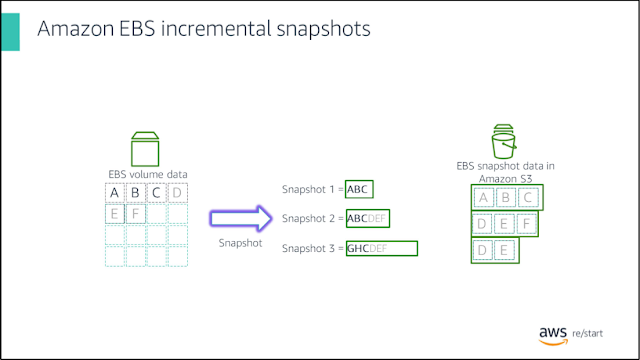

EBS offers volume types for different workloads, grouped into two categories: SSD (Provisioned IOPS SSD, General Purpose SSD) and HDD (Throughput Optimized HDD, Cold HDD). Taking regular snapshots is essential for data protection, because snapshots let you restore a volume after a hardware failure or an accidental deletion. Snapshots copy block-level data to Amazon S3, where it is stored redundantly across multiple facilities. The first snapshot captures the full state of the volume. Each later snapshot is incremental and copies only the blocks that changed since the previous one, which reduces both storage and transfer.
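Incremental snapshots can be modeled as a chain of deltas. This is a toy illustration of the idea, not how EBS stores snapshots internally. It represents a volume as a dictionary of block index to contents and ignores blocks that are deleted.

```python
from typing import Dict, List

Volume = Dict[int, bytes]  # block index -> block contents


def restore(chain: List[Volume]) -> Volume:
    """Rebuild a volume by replaying the full snapshot and then every delta, in order."""
    state: Volume = {}
    for delta in chain:
        state.update(delta)
    return state


def take_snapshot(volume: Volume, chain: List[Volume]) -> Volume:
    """Copy only blocks that differ from the last snapshot (all blocks for the first one)."""
    previous = restore(chain)
    return {i: data for i, data in volume.items() if previous.get(i) != data}


vol = {0: b"boot", 1: b"data-v1", 2: b"logs"}
chain = [take_snapshot(vol, [])]          # first snapshot: every block
vol[1] = b"data-v2"                       # one block changes
chain.append(take_snapshot(vol, chain))   # second snapshot: only block 1
print([len(s) for s in chain])
```

The first snapshot holds three blocks and the second holds one. Replaying both still reproduces the current volume.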

Amazon Data Lifecycle Manager (Amazon DLM) automates EBS volume snapshot management, ensuring efficient backup processes. It simplifies creation, retention, and deletion, promoting regular backups, compliance, and reduced storage costs. To use Amazon DLM, tag your EBS volume and create a lifecycle policy with core settings:

- Resource Type: EBS volumes are the AWS resource managed by the policy.

- Target Tag: A specified tag associated with an EBS volume for policy management.

- Schedule: Defines how often snapshots are created and how many are retained. Snapshots start within an hour of the scheduled time. When a new snapshot would exceed the retention count, the oldest snapshot is deleted.
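The three core settings map onto the `PolicyDetails` structure that boto3's `dlm.create_lifecycle_policy` accepts. The sketch below builds that structure and also simulates count-based retention locally. The tag `Backup=true`, the daily 03:00 UTC schedule, and the schedule name are hypothetical choices.

```python
from collections import deque
from typing import Deque, List


def dlm_policy_details(tag_key: str, tag_value: str, retain_count: int) -> dict:
    """PolicyDetails for a daily snapshot schedule on tagged EBS volumes."""
    return {
        "PolicyType": "EBS_SNAPSHOT_MANAGEMENT",
        "ResourceTypes": ["VOLUME"],                           # resource type
        "TargetTags": [{"Key": tag_key, "Value": tag_value}],  # target tag
        "Schedules": [{                                         # schedule
            "Name": "daily",
            "CreateRule": {"Interval": 24, "IntervalUnit": "HOURS", "Times": ["03:00"]},
            "RetainRule": {"Count": retain_count},
            "CopyTags": True,
        }],
    }


def apply_retention(snapshots: Deque[str], new_snapshot: str, retain_count: int) -> List[str]:
    """Local simulation: add the new snapshot, then delete the oldest ones beyond the limit."""
    snapshots.append(new_snapshot)
    deleted = []
    while len(snapshots) > retain_count:
        deleted.append(snapshots.popleft())
    return deleted


# With boto3 (requires credentials; the role ARN is a placeholder):
#   dlm = boto3.client("dlm")
#   dlm.create_lifecycle_policy(ExecutionRoleArn="arn:aws:iam::...:role/...",
#                               Description="Daily EBS snapshots", State="ENABLED",
#                               PolicyDetails=dlm_policy_details("Backup", "true", 7))
```

Running `apply_retention` five times with a limit of three deletes nothing for the first three snapshots, then removes `snap-0` and `snap-1` in turn.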

Amazon Elastic File System (Amazon EFS) is a fully managed AWS service that provides scalable file storage. It offers petabyte-scale storage, low-latency access, and compatibility with all Linux-based Amazon Machine Images (AMIs) for Amazon EC2. Because EFS grows and shrinks automatically as files are added and removed, applications have the storage they need without disruption. This makes it a straightforward choice for big data, analytics, and many other workloads.

Amazon EFS offers performance control through attributes like Performance Mode (General Purpose and Max I/O), Storage Class (Standard and Infrequent Access), and Throughput Mode (Bursting Throughput and Provisioned Throughput).

Performance Mode:

- General Purpose is recommended for most EFS file systems, suitable for latency-sensitive use cases like web serving and content management.

- Max I/O mode allows higher throughput and operations per second, beneficial for highly parallelized applications like big data analysis.

Storage Class:

- Standard is for active workloads with frequently accessed files.

- Infrequent Access is a cost-optimized option for long-lived, infrequently accessed files.

Throughput Mode:

- Bursting Throughput, the default mode, scales throughput with the amount of data stored in the Standard storage class.

- Provisioned Throughput lets you provision throughput immediately, independent of the amount of data stored, so throughput can grow beyond the Bursting Throughput limits.

Note that the performance mode cannot be changed after the file system is created.
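These attributes correspond to parameters of boto3's `efs.create_file_system` (`PerformanceMode`, `ThroughputMode`, `ProvisionedThroughputInMibps`). Storage class transitions are set separately with `put_lifecycle_configuration`. The sketch only builds the request arguments, so it runs without AWS access. The creation token, the 30-day transition, and enabling encryption are illustrative choices.

```python
from typing import Optional


def efs_create_kwargs(token: str, max_io: bool = False,
                      provisioned_mibps: Optional[float] = None) -> dict:
    kwargs = {
        "CreationToken": token,                                      # idempotency token
        "PerformanceMode": "maxIO" if max_io else "generalPurpose",  # cannot change later
        "Encrypted": True,
    }
    if provisioned_mibps is None:
        kwargs["ThroughputMode"] = "bursting"     # default: scales with data stored
    else:
        kwargs["ThroughputMode"] = "provisioned"  # independent of data stored
        kwargs["ProvisionedThroughputInMibps"] = provisioned_mibps
    return kwargs


# Storage class: move files not accessed for 30 days to Infrequent Access.
IA_LIFECYCLE = [{"TransitionToIA": "AFTER_30_DAYS"}]

# With boto3 (requires credentials):
#   efs = boto3.client("efs")
#   fs_id = efs.create_file_system(**efs_create_kwargs("app-fs"))["FileSystemId"]
#   efs.put_lifecycle_configuration(FileSystemId=fs_id, LifecyclePolicies=IA_LIFECYCLE)
```

Because the performance mode is fixed at creation, it is worth deciding between General Purpose and Max I/O before calling `create_file_system`.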

Amazon EFS provides file storage in the cloud: you create a file system, mount it on Amazon EC2 instances, and access it concurrently through the NFSv4 protocol within a VPC.

The diagram displays a VPC with three Availability Zones, each containing a dedicated mount target. It is advisable to access the file system through a mount target within the same Availability Zone. Notably, one of the Availability Zones includes two subnets, but a mount target is established in only one of these subnets.
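The layout in the diagram (one mount target per Availability Zone, even when an AZ has two subnets) can be expressed as a small planning helper. This toy sketch represents each mount target by its subnet ID. The AZ names and subnet IDs are hypothetical, and a real mount target would be created with boto3's `efs.create_mount_target(FileSystemId=..., SubnetId=..., SecurityGroups=[...])`.

```python
from typing import Dict, List


def plan_mount_targets(subnets_by_az: Dict[str, List[str]]) -> Dict[str, str]:
    """EFS allows one mount target per Availability Zone; pick the first subnet in each AZ."""
    return {az: subnets[0] for az, subnets in subnets_by_az.items() if subnets}


def mount_target_for(instance_az: str, targets: Dict[str, str]) -> str:
    """Prefer the mount target in the instance's own AZ, as recommended above."""
    if instance_az in targets:
        return targets[instance_az]
    return next(iter(targets.values()))  # cross-AZ fallback: works, but adds latency


subnets = {
    "us-east-1a": ["subnet-a1"],
    "us-east-1b": ["subnet-b1", "subnet-b2"],  # two subnets, but only one mount target
    "us-east-1c": ["subnet-c1"],
}
targets = plan_mount_targets(subnets)
print(targets)
```

Instances in `subnet-b2` still reach the file system through the mount target in `subnet-b1`, because both subnets are in the same AZ.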

Key use cases for EFS include:

- Home Directories: Enables storage for organizations with multiple users accessing and sharing common datasets.

- Enterprise Applications: Serves as a scalable, elastic, available, and durable file store for enterprise applications, facilitating migration from on-premises to AWS.

- Application Testing and Development: Provides a common storage repository for development environments, allowing secure code and file sharing.

- Database Backups: Offers a standard file system easily mounted with NFSv4 for database servers, facilitating portable backups with native tools.

- Web Serving and Content Management: Delivers a durable, high-throughput file system for content management systems supporting applications like websites and online publications.

- Big Data Analytics: Supports high-throughput big data applications with scalability, read-after-write consistency, and low-latency file operations.

- Media Workflows: Ideal for media workflows, including video editing, studio production, and broadcast processing, offering shared storage, high throughput, and data consistency.

Amazon EFS allows concurrent access from EC2 instances in multiple Availability Zones, enhancing scalability and enabling collaborative usage across different applications and scenarios.

Amazon S3 Glacier is a secure, low-cost data archiving service designed for 11 nines (99.999999999%) of durability for stored objects. Pricing varies by Region, and the low cost makes it well suited to long-term archiving. You can work with objects in Glacier through Amazon S3 Lifecycle policies or through the Amazon S3 Glacier API. A Lifecycle policy automatically transitions objects to the Glacier storage class, while the API gives you more direct, manual control.
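A Lifecycle policy that archives objects is a small configuration document. The sketch below builds one in the shape that boto3's `s3.put_bucket_lifecycle_configuration` accepts. The bucket name, the `logs/` prefix, and the 90-day threshold are hypothetical.

```python
def glacier_lifecycle_configuration(prefix: str, days: int) -> dict:
    """One rule: objects under `prefix` move to the Glacier storage class after `days` days."""
    return {
        "Rules": [{
            "ID": f"archive-{prefix.rstrip('/') or 'all'}",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
        }]
    }


# With boto3 (requires credentials):
#   s3 = boto3.client("s3")
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="example-bucket",
#       LifecycleConfiguration=glacier_lifecycle_configuration("logs/", 90))
```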

Objects that Amazon S3 moves into the Glacier storage class remain managed by Amazon S3 on your behalf and cannot be accessed directly through the Glacier API; you restore them with the Amazon S3 console or the S3 API. Alternatively, you can upload, retrieve, and manage archives in Glacier vaults directly with the Amazon S3 Glacier API. With this API, retrieval is an asynchronous process that starts with a job. The Initiate Job API operation starts an archive retrieval, which can return a whole archive or only a byte range of it. An inventory-retrieval job lists the archives in a vault and can filter them by creation date.

You can track when an archive retrieval completes with the Describe Job API, or receive a notification through Amazon SNS. With Glacier Select, you can also run analytics queries directly on archived data without restoring the archive first. Glacier automatically encrypts all archived data.
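The asynchronous retrieval flow (Initiate Job, then Describe Job until the job completes, then fetch the output) can be sketched with boto3's Glacier client calls `initiate_job`, `describe_job`, and `get_job_output`. The client is injected so the logic can be exercised without AWS. The `Standard` tier and the polling interval are illustrative choices, and in practice an SNS notification avoids polling entirely.

```python
import time
from typing import Any, Optional


def retrieve_archive(glacier: Any, vault: str, archive_id: str,
                     byte_range: Optional[str] = None, poll_seconds: float = 900) -> bytes:
    params = {"Type": "archive-retrieval", "ArchiveId": archive_id, "Tier": "Standard"}
    if byte_range:
        params["RetrievalByteRange"] = byte_range  # "start-end", megabyte-aligned
    job_id = glacier.initiate_job(vaultName=vault, jobParameters=params)["jobId"]
    # Retrieval is asynchronous: poll Describe Job until the job reports completion.
    while not glacier.describe_job(vaultName=vault, jobId=job_id)["Completed"]:
        time.sleep(poll_seconds)
    output = glacier.get_job_output(vaultName=vault, jobId=job_id)
    return output["body"].read()


# With boto3 (requires credentials; vault and archive ID are placeholders):
#   glacier = boto3.client("glacier")
#   data = retrieve_archive(glacier, "example-vault", "EXAMPLE-ARCHIVE-ID")
```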

Amazon S3 is a Regional object storage service known for durability and high availability. Data is stored as objects in buckets, and each object is identified by a key that is unique within its bucket. Amazon S3 offers storage classes for different use cases, including S3 Standard, S3 Intelligent-Tiering, S3 Standard-Infrequent Access, and S3 Glacier. With Amazon S3, you can create and name buckets, store large amounts of data as objects with unique keys, and download that data at any time. You can grant or deny individual users permission to upload and download data, and authentication mechanisms help keep data secure. Amazon S3 supports standard interfaces: the REST API and a legacy SOAP interface, which is deprecated.

Amazon S3 versioning protects objects against accidental overwrites and deletions. You enable versioning at the bucket level, and Amazon S3 then assigns a unique version ID to each version of an object. A bucket is in one of three versioning states: unversioned (the default), versioning-enabled, or versioning-suspended. The state determines how object versions are stored and retrieved. Once versioning is enabled on a bucket, the bucket cannot return to the unversioned state; versioning can only be suspended.
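The three versioning states can be illustrated with a simplified in-memory model. This is a toy, not the S3 API. Its version IDs (`v1`, `v2`, ...) are made up, and it represents a delete marker as a version whose data is `None`. It does capture the documented rules: writes in an unversioned or suspended bucket use the version ID `null` and replace any existing `null` version, while writes in a versioning-enabled bucket always add a new version.

```python
import itertools
from typing import Dict, List, Optional, Tuple


class VersionedBucket:
    """Toy model of S3 versioning states: unversioned -> enabled <-> suspended."""

    def __init__(self) -> None:
        self.state = "unversioned"
        self._ids = itertools.count(1)
        # key -> [(version_id, data)], oldest first; data None means a delete marker
        self.versions: Dict[str, List[Tuple[str, Optional[bytes]]]] = {}

    def set_state(self, state: str) -> None:
        if state not in ("enabled", "suspended"):
            raise ValueError("a bucket can be enabled or suspended, never made unversioned again")
        self.state = state

    def put(self, key: str, data: Optional[bytes]) -> None:
        history = self.versions.setdefault(key, [])
        if self.state == "enabled":
            history.append((f"v{next(self._ids)}", data))  # always a new version
        else:
            history[:] = [v for v in history if v[0] != "null"]  # replace the null version
            history.append(("null", data))

    def delete(self, key: str) -> None:
        if self.state == "unversioned":
            self.versions.pop(key, None)  # permanently removed
        else:
            self.put(key, None)           # delete marker; older versions stay retrievable

    def get(self, key: str, version_id: Optional[str] = None) -> bytes:
        history = self.versions.get(key, [])
        if version_id is None:
            if not history or history[-1][1] is None:
                raise KeyError(key)  # missing, or the current version is a delete marker
            return history[-1][1]
        for vid, data in history:
            if vid == version_id and data is not None:
                return data
        raise KeyError((key, version_id))
```

After enabling versioning, an overwrite and a delete both leave earlier versions retrievable by version ID. That is the protection against accidental overwrites and deletes described above.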

Amazon S3 Intelligent-Tiering is a storage class designed for cost optimization by automatically moving data between access tiers based on access patterns. It utilizes two tiers: one optimized for frequent access and another lower-cost tier for infrequent access. This storage class is suitable for long-lived data with unpredictable or unknown access patterns, aiming to minimize costs without sacrificing performance.
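A toy simulation of the two-tier behavior, assuming the documented rule that an object moves to the infrequent access tier after 30 consecutive days without access and returns to the frequent tier when it is accessed again. The dates are hypothetical, and the model ignores the optional archive tiers and monitoring fees.

```python
from datetime import date, timedelta
from typing import List, Set

INFREQUENT_AFTER_DAYS = 30  # consecutive days without access before moving down a tier


def tier_on(day: date, last_access: date) -> str:
    idle_days = (day - last_access).days
    return "infrequent" if idle_days >= INFREQUENT_AFTER_DAYS else "frequent"


def simulate(start: date, days: int, accesses: Set[date]) -> List[str]:
    """Tier of a single object on each day, given the days on which it is accessed."""
    last_access = start  # the object is uploaded (accessed) on the first day
    tiers = []
    for n in range(days):
        day = start + timedelta(days=n)
        if day in accesses:
            last_access = day  # an access moves the object back to the frequent tier
        tiers.append(tier_on(day, last_access))
    return tiers


tiers = simulate(date(2024, 1, 1), 60, {date(2024, 2, 15)})
print(tiers[10], tiers[40], tiers[45])
```

The object sits in the frequent tier at first, drops to the infrequent tier after a month without access, and moves back on the day it is read again.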

Amazon S3 Object Lock follows the write once, read many (WORM) model: it prevents an object version from being deleted or overwritten for a fixed period or indefinitely. This helps meet regulatory requirements for WORM storage and adds an extra layer of protection against changes and deletions.

Object Lock manages retention in two ways: retention periods and legal holds. A retention period sets a fixed length of time during which an object version stays WORM-protected and cannot be modified or deleted. A legal hold provides the same protection but has no expiration date; it remains in place until it is explicitly removed. An object version can have a retention period, a legal hold, or both.
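The interaction between retention periods and legal holds reduces to a simple check. This toy model ignores governance-mode bypass permissions and the difference between governance and compliance modes. The real settings are applied with boto3's `s3.put_object_retention` and `s3.put_object_legal_hold`, as shown in the comments. Bucket, key, and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class LockedVersion:
    retain_until: Optional[datetime] = None  # end of the retention period (None = no period)
    legal_hold: bool = False                 # stays in force until explicitly removed

    def can_delete_or_overwrite(self, now: datetime) -> bool:
        if self.legal_hold:
            return False  # a legal hold has no expiration date
        if self.retain_until is not None and now < self.retain_until:
            return False  # still inside the retention period
        return True


# Real API equivalents (requires boto3, credentials, and a bucket with Object Lock enabled):
#   s3.put_object_retention(Bucket="example-bucket", Key="report.pdf",
#       Retention={"Mode": "GOVERNANCE",
#                  "RetainUntilDate": datetime(2030, 1, 1, tzinfo=timezone.utc)})
#   s3.put_object_legal_hold(Bucket="example-bucket", Key="report.pdf",
#       LegalHold={"Status": "ON"})
```

An expired retention period no longer protects the version, but a legal hold on the same version still does. This is why the two mechanisms are useful in combination.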

AWS Storage Gateway facilitates hybrid scenarios by connecting on-premises environments to the AWS Cloud, allowing for a combination of on-premises and cloud storage. It provides three interfaces: File, Volume, and Tape, each offering different approaches to access cloud-based storage.

- File Gateway: This interface stores and retrieves information as objects in Amazon S3, utilizing a local cache for faster access. It is suitable for scenarios where data needs to be seamlessly integrated between on-premises and S3.

- Volume Gateway: Designed for on-premises systems using iSCSI, Volume Gateway provides a mechanism to link on-premises storage to Amazon S3. It enables the storage of volumes in the cloud.

- Tape Gateway: Tape Gateways extend local tape backup libraries to the cloud, with storage backed by Amazon S3. This interface allows for the integration of tape-based backups with the cloud.

Other AWS services for moving data between on-premises environments and AWS include:

- AWS Transfer Family: This fully managed service transfers files directly into and out of Amazon S3 over protocols such as SFTP, FTPS, and FTP.

- AWS DataSync: This data transfer service simplifies, automates, and accelerates the movement and replication of data between on-premises storage systems and AWS storage services. It supports transfers over the internet or through AWS Direct Connect.

- AWS Snowball: Addressing large-scale data transfer challenges, Snowball is a petabyte-scale data transport solution. It utilizes secure appliances to facilitate the transfer of large amounts of data into and out of the AWS Cloud, providing a reliable and efficient solution for massive data transfers.

The diagram shows a high-level view of the DataSync architecture for transferring files between on-premises storage and AWS storage services. Using AWS DataSync involves several steps:

- Deployment: Deploy the DataSync agent on-premises.

- Connection: Connect the agent to a file system or storage array using the Network File System (NFS) protocol.

- Selection: Choose Amazon EFS or Amazon S3 as your AWS storage and initiate the data transfer.
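The three steps map onto boto3 DataSync calls: `create_location_nfs` (the connection through the agent), `create_location_s3` (the selected destination), `create_task`, and `start_task_execution`. This sketch assumes the agent is already deployed and activated (step 1) and that its ARN, the NFS host and export path, the bucket ARN, and the IAM role ARN are supplied by the caller. All of these are placeholders, and the task name `nfs-to-s3` is hypothetical. The client is injected so the call order can be tested without AWS.

```python
from typing import Any


def nfs_to_s3_transfer(ds: Any, agent_arn: str, nfs_host: str, nfs_path: str,
                       bucket_arn: str, bucket_role_arn: str) -> str:
    # Step 2 (connection): register the on-premises NFS export, reached through the agent.
    source = ds.create_location_nfs(
        ServerHostname=nfs_host,
        Subdirectory=nfs_path,
        OnPremConfig={"AgentArns": [agent_arn]},
    )["LocationArn"]
    # Step 3 (selection): choose Amazon S3 as the destination ...
    destination = ds.create_location_s3(
        S3BucketArn=bucket_arn,
        S3Config={"BucketAccessRoleArn": bucket_role_arn},
    )["LocationArn"]
    # ... and start the transfer.
    task_arn = ds.create_task(SourceLocationArn=source,
                              DestinationLocationArn=destination,
                              Name="nfs-to-s3")["TaskArn"]
    return ds.start_task_execution(TaskArn=task_arn)["TaskExecutionArn"]


# With boto3: nfs_to_s3_transfer(boto3.client("datasync"), agent_arn, "nas.example.internal",
#                                "/export/data", "arn:aws:s3:::example-bucket", role_arn)
```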

DataSync utilizes an on-premises software agent that connects to existing storage systems via the NFS protocol, ensuring encrypted data transfer with Transport Layer Security (TLS). The main use cases for DataSync include:

- Data Migration: Rapidly move active datasets over the network into Amazon S3, Amazon EFS, or Amazon FSx for Windows File Server, with automatic encryption and data integrity validation.

- Archiving Cold Data: Transfer cold data from on-premises storage to durable and secure long-term storage, such as Amazon S3 Glacier or Amazon S3 Glacier Deep Archive, freeing up on-premises storage capacity.

- Data Protection: Move data into any Amazon S3 storage class, selecting the most cost-effective option. Additionally, send data to Amazon EFS or Amazon FSx for Windows File Server for a standby file system.

- Data Movement for In-cloud Processing: Move data in and out of AWS for processing, accelerating hybrid cloud workflows in various industries like life sciences, media and entertainment, financial services, and oil and gas. This supports timely in-cloud processing for critical workflows such as machine learning, video production, big data analytics, and seismic research.

AWS Snowball is a petabyte-scale data transport solution for transferring large amounts of data into and out of the AWS Cloud. It addresses challenges such as high network costs, long transfer times, and security concerns. You create a job in the AWS Management Console, and a Snowball appliance is shipped to you automatically. After connecting the appliance to your local network, you run the Snowball client to select files and transfer them to the appliance, and then ship it back using the automatically updated E Ink shipping label. Security features include tamper-resistant enclosures, 256-bit encryption, and a Trusted Platform Module (TPM) that helps maintain chain of custody. The three main use cases are transferring data from sensors or machines at edge locations, collecting data in remote locations with limited connectivity, and aggregating media and entertainment content.
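The "long transfer times" problem is easy to quantify. This back-of-the-envelope calculation uses decimal terabytes and an assumed link utilization (80% by default, an illustrative figure) to estimate how many days a network transfer would take.

```python
def network_transfer_days(terabytes: float, gbps: float, utilization: float = 0.8) -> float:
    bits = terabytes * 1e12 * 8                       # decimal TB -> bits
    seconds = bits / (gbps * 1e9 * utilization)       # effective throughput in bits/s
    return seconds / 86_400                           # seconds -> days


print(round(network_transfer_days(100, 1, utilization=1.0), 2))
print(round(network_transfer_days(100, 1), 2))
```

Even at full utilization, 100 TB over a 1-Gbps link takes about 9.3 days (about 11.6 days at 80%). This kind of delay is what makes shipping an appliance attractive at petabyte scale.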

Other AWS data transfer solutions mentioned include:

- AWS Transfer for SFTP (now part of AWS Transfer Family): Enables direct file transfer to and from Amazon S3 using the SSH File Transfer Protocol (SFTP).

- AWS DataSync: Automates data movement between on-premises storage and Amazon S3 or Amazon EFS.
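An SFTP endpoint backed by Amazon S3 can be set up with boto3's Transfer Family calls `create_server` and `create_user`. This is a minimal sketch with an injected client. It assumes service-managed users (SSH keys stored by the service), and the user name, IAM role ARN, bucket, and key are hypothetical.

```python
from typing import Any


def create_sftp_server(transfer: Any) -> str:
    response = transfer.create_server(
        Protocols=["SFTP"],
        IdentityProviderType="SERVICE_MANAGED",  # users and SSH keys managed by the service
        Domain="S3",                             # files land in Amazon S3
    )
    return response["ServerId"]


def add_sftp_user(transfer: Any, server_id: str, user: str, role_arn: str,
                  bucket: str, ssh_public_key: str) -> None:
    transfer.create_user(
        ServerId=server_id,
        UserName=user,
        Role=role_arn,                           # IAM role granting access to the bucket
        HomeDirectory=f"/{bucket}/{user}",       # each user starts in their own prefix
        SshPublicKeyBody=ssh_public_key,
    )


# With boto3: t = boto3.client("transfer"); sid = create_sftp_server(t)
```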